How many functions are there from () to ()? (Updated)

I was recently looking at some of Paul Taylor's writings on what he calls Abstract Stone Duality. It's partly another approach to 'topologising' computer science, independently of Escardo's work. I'm not yet in a position to say much about what he does, except that it gives a neat new language to talk about computable continuous functions without having to build things up from Set Theory. But just for fun I want to look, out of context, at one teeny weeny little thing that he mentions, the Sierpinski space. If you've not met general topology before, it can be a tough topic to grasp. So what I want to do is look in detail at how we might think about a really simple seeming problem from a topological perspective.

Haskell defines a type called (). There is one value of type (), confusingly called (). Most of this post is about functions of the type () -> (). You'd imagine there couldn't be much to say. But this topic is much more complicated than some people might imagine.

How many functions of type () -> () are there?

(Before proceeding, the character ⊥ should look like _|_. Apologies if your font/browser makes it looks like something else.)

Let's start with the obvious function of this type.

It seems like this is the only possible function. The argument can only be () and the result can only be (). What other choices could we make?

Well here's another implementation:

It certainly looks different, but it still just maps () to (). So it appears that f1 and f2 are the same. But there is a way to tell them apart. Make the following definition:

Attempting to evaluate loop sends Haskell into an infinite loop. Either you'll get an error message, or your computer will loop forever, never giving a result. Similarly, evaluating f1 loop will also fail to terminate with a sensible result. But f2 loop terminates fine and returns (). Because Haskell is a lazy language, f2 doesn't need to evaluate its argument before giving its result. f1, on the other hand, returns its argument, so looking at the result causes non-termination. So amazingly, f1 and f2 are different. We have at least two distinct functions of type () -> (). Are there any more?

We can treat a non-terminating function as if it returns a special value called ⊥ (pronounced bottom). So we can summarise the above in a kind of truth table:

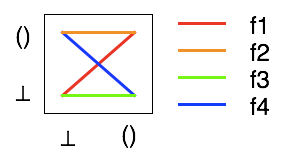

That immediately suggests two more functions, f3 and f4, which are also illustrated.

So it now looks like there are four functions of type () -> (). Here's a possible implementation of f3:

f3 is simply a function that deliberately sabotages itself. But f4 is the interesting one. In order to have f4 ⊥ = (), f4 must ignore its argument so that it doesn't get caught in a quagmire of non-termination. But if it ignores its argument, then it has to ignore its argument when f4 () is evaluated. So f4 () must also equal (). In other words, f4 cannot be implemented. We have only three functions of type () -> ().

So how can we characterise these functions mathematically? Haskell functions don't correspond to mathematical functions from the set {()} to {()}. If we think of () as a set with two elements, {(),⊥}, then Haskell functions still don't correspond to functions from this set to itself.

Let's give the set {⊥,()} a name, S. One approach to formalising Haskell functions is to impose an ordering on S. Here's one:

⊥<=⊥

⊥<=()

()<=()

(Now you can see why ⊥ is called "bottom". If you think of <= as a ranking, ⊥ is at the bottom of the heap.) A monotone function is defined to be a function f such that if x<=y then f(x)<=f(y). If we plot a 'graph' of f with ⊥ and () along the axes, monotone functions are the ones that don't descend as you go from left to right:

The possible Haskell functions correspond precisely to the monotone functions. (Mathematicians would probably call these functions monotonically increasing but the computer science literature I have just seems to call them monotone).

The possible Haskell functions correspond precisely to the monotone functions. (Mathematicians would probably call these functions monotonically increasing but the computer science literature I have just seems to call them monotone).

Another useful definition is the notion of a strict function. This is one that maps ⊥ to ⊥ and so goes through the 'origin' on the graph above. In a strict language you can't implement f2.

But maybe you can think of another possible implementation of a function of type () -> ():

When working with fully lazy evaluation this is indistinguishable from f3 so we can ignore it. But in a strict language we can distinguish f3 and f5. In Haskell, for example, we can use the function seq to force evaluation of its first argument before moving onto its second. f3 `seq` () and f5 `seq` () are distinguishable because the first evaluates to () but the second gives ⊥.

So the answer to the original question is (I think):

* 1 in a total language or "in mathematics"

* 3 in a lazy language like Haskell when working completely lazily

* 4 in Haskell when using seq to enforce strictness

* 3 in a strict language like Ocaml

You'd think I'd have exhausted everything that there is to say by now. But there's a whole lot more. But before I get to it I need to talk a bit about topology in my next post.

And I'm completely ignoring the philosophical issue of what ⊥ means when you can't tell the difference between an answer of ⊥ and a slow computer taking longer than you expect to compute ().

Update: This problem was trickier than I originally anticipated. The above text incorporates a couple of changes based on comments I received.

Haskell defines a type called (). There is one value of type (), confusingly called (). Most of this post is about functions of the type () -> (). You'd imagine there couldn't be much to say. But this topic is much more complicated than some people might imagine.

How many functions of type () -> () are there?

(Before proceeding, the character ⊥ should look like _|_. Apologies if your font/browser makes it looks like something else.)

Let's start with the obvious function of this type.

> f1 x = x

It seems like this is the only possible function. The argument can only be () and the result can only be (). What other choices could we make?

Well here's another implementation:

> f2 _ = ()

It certainly looks different, but it still just maps () to (). So it appears that f1 and f2 are the same. But there is a way to tell them apart. Make the following definition:

> loop = loop

Attempting to evaluate loop sends Haskell into an infinite loop. Either you'll get an error message, or your computer will loop forever, never giving a result. Similarly, evaluating f1 loop will also fail to terminate with a sensible result. But f2 loop terminates fine and returns (). Because Haskell is a lazy language, f2 doesn't need to evaluate its argument before giving its result. f1, on the other hand, returns its argument, so looking at the result causes non-termination. So amazingly, f1 and f2 are different. We have at least two distinct functions of type () -> (). Are there any more?

We can treat a non-terminating function as if it returns a special value called ⊥ (pronounced bottom). So we can summarise the above in a kind of truth table:

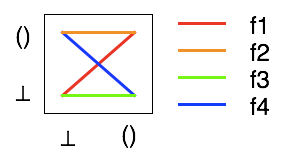

That immediately suggests two more functions, f3 and f4, which are also illustrated.

So it now looks like there are four functions of type () -> (). Here's a possible implementation of f3:

f3 _ = loop

f3 is simply a function that deliberately sabotages itself. But f4 is the interesting one. In order to have f4 ⊥ = (), f4 must ignore its argument so that it doesn't get caught in a quagmire of non-termination. But if it ignores its argument, then it has to ignore its argument when f4 () is evaluated. So f4 () must also equal (). In other words, f4 cannot be implemented. We have only three functions of type () -> ().

So how can we characterise these functions mathematically? Haskell functions don't correspond to mathematical functions from the set {()} to {()}. If we think of () as a set with two elements, {(),⊥}, then Haskell functions still don't correspond to functions from this set to itself.

Let's give the set {⊥,()} a name, S. One approach to formalising Haskell functions is to impose an ordering on S. Here's one:

⊥<=⊥

⊥<=()

()<=()

(Now you can see why ⊥ is called "bottom". If you think of <= as a ranking, ⊥ is at the bottom of the heap.) A monotone function is defined to be a function f such that if x<=y then f(x)<=f(y). If we plot a 'graph' of f with ⊥ and () along the axes, monotone functions are the ones that don't descend as you go from left to right:

The possible Haskell functions correspond precisely to the monotone functions. (Mathematicians would probably call these functions monotonically increasing but the computer science literature I have just seems to call them monotone).

The possible Haskell functions correspond precisely to the monotone functions. (Mathematicians would probably call these functions monotonically increasing but the computer science literature I have just seems to call them monotone).Another useful definition is the notion of a strict function. This is one that maps ⊥ to ⊥ and so goes through the 'origin' on the graph above. In a strict language you can't implement f2.

But maybe you can think of another possible implementation of a function of type () -> ():

> f5 = loop :: () -> ()

When working with fully lazy evaluation this is indistinguishable from f3 so we can ignore it. But in a strict language we can distinguish f3 and f5. In Haskell, for example, we can use the function seq to force evaluation of its first argument before moving onto its second. f3 `seq` () and f5 `seq` () are distinguishable because the first evaluates to () but the second gives ⊥.

So the answer to the original question is (I think):

* 1 in a total language or "in mathematics"

* 3 in a lazy language like Haskell when working completely lazily

* 4 in Haskell when using seq to enforce strictness

* 3 in a strict language like Ocaml

You'd think I'd have exhausted everything that there is to say by now. But there's a whole lot more. But before I get to it I need to talk a bit about topology in my next post.

And I'm completely ignoring the philosophical issue of what ⊥ means when you can't tell the difference between an answer of ⊥ and a slow computer taking longer than you expect to compute ().

Update: This problem was trickier than I originally anticipated. The above text incorporates a couple of changes based on comments I received.

19 Comments:

I'm confused about your f1. Did you mean to define it as "f1 x = ()"?

Ah, never mind. I get it now.

f3 and f5 are not indistinguishable.

f3 `seq` () = ()

f5 `seq` () = _|_

I was a bit puzzled at first, but now it seems natural.

There may be a point in making the distinction between a function and a procedure, as per Brian Harvey in Berkeley CS 61A.

A function is the mathematical thing, while a procedure is the thing (i.e. code) that describes how to compute a function. You may have several procedures that compute the same function, or you may have a function for which there is no procedure to compute it.

Now if you think in these terms, you are simply describing three different procedures, which is not at all weird. The procedures f1 and f2 are not the same because in the first case (f1) you ask Haskell to actually evaluate the argument (even though, per haskell semantics, the actually evaluation of the argument will happen sometime in the future, when you actually need it).

And the third procedure is nothing more than an infinite loop.

The type system of Haskell (or any other practical language) doesn't guarantee that a procedure that has type say t1 -> t2 actually returns a value of type t2, but it guarantees that *if the evaluation ends* the resulting value will be of the t2.

Hmm. Nice food for thought.

To turn your post inside out, we might say that "picking a category in which types correspond to objects and values to arrows is a fun challenge". Since f4 is not decidable, we want to choose a category that has no arrow for f4.

The category of sets and functions doesn't work because f4 is a perfectly good set-function; fortunately, the category Dcpo of complete partial orders and directed-suprema-preserving functions looks more promising - it seems to have exactly the arrows we need for for all the values we think should exist in the type of functions from S -> S.

In particular, f1, f2, and f3 are all included but f4 is excluded because it fails to commute with sup; i.e. f4( sup {_|_, ()} ) != sup f4({_|_, ()}).

Next, if we wanted strict functions, we should require that our arrows be continuous (not just sup-preserving). This would rule out f2.

Finally, total functions are boring here because there's exactly one set-function from {()} -> {()} and it's definitely continuous.

What other properties make it seem that the category of Dcpos w/ sup-preserving functions as arrows is a hospitable place for Haskellites?

luke,

Good point.My answer to that is that if we're using seq then we've stepped out of the pure lazy component of Haskell and we're playing by yet another set of rules. I'll have to think about how that fits into the grand scheme and clarify what I say.

Great post! Looking forward to more...

I've been toying for awhile with the idea of an actual introduction to Haskell along these lines. "A Very Abstract Approach to Haskell" or something similar. It would be a very weird introduction, I guess, but there's more than enough normal ones by now. I have the idea of starting with 1 and 0 (unit and bottom) and building types-first from there, in a (literally) logical fashion. Anyway I find this kind of stuff edifying, fun and inspiring all at once. Keep it up!

And finally, to Michael, we should certainly say something about the existence of certain fixed points, and maybe even that least and greatest fixed points coincide? This leads to a bunch of other requirements that dcpo "just happens" to satisfy, doesn't it? I'm nearly at the end of my knowledge here, though...

This comment has been removed by a blog administrator.

> Now if you think in these terms, you are simply describing three different procedure

But Haskell 'procedures' look a lot like functions in so many ways. So it's nice to try to find a mathematical formulation of what a procedure is so that looks like a function. And that's what this post is about.

> So it's nice to try to find a mathematical formulation of what a procedure is so that looks like a function. And that's what this post is about.

The point was that functions are only mathematical entities. Even if the programming language is a "functional" one, the term "function" is overloaded to mean "procedure" (well, not to be confused with Pascal procedures).

That is, even if the notation is mathematical (which can be very convenient), it is only a description of a way to mechanically compute the function.

I agree that f1 and f2 compute the function you want, which is the only function f : () -> (), but f3 doesn't, because it doesn't terminate.

The problem with f1 is the way you call it. It is not f1 that gets into an infinite loop, but the evaluation of its argument.

That's a nice post, Dan.

To go on a little further, "Tis a maxim tremendous, but trite" (Lewis Carroll) that any funtion f: () -> () is determined by its values at _|_ and (). This means that

f x = f_|_ or x and f()

(please excuse my lack of fluency in Haskell).

This equation is known as the Phoa Principle,

after Wesley Phoa, who wrote one of the first theses on Synthetic Domain Theory in c1990 under the supervision of Martin Hyland in Cambridge. However, Wes is now busy making lots of money.

Anyway, it turns out that this very simple equation makes the difference between mere lambda calculus and a theory of topology.

On the other hand, some "real mathematicians" may think that this page is entirely about empty set theory. There is another space with a non-Hausdorff topology that plays an import role in real analysis, called the ascending reals. This is in many ways similar to the Sierpinski space.

For an introduction to it, please see

here.

Your definition of monotone is deviant: a monotone function is one that does not change direction, no more no less: it can be monotonely increasing or monotonely decreasing. Furthermore with just 2 points all functions are monotone anyhow.

Your functions fx remind me my domains and category when we where trying to find models for PCF, parallel PCF, the definition of parallel-or...

Haaa.

Now all you need to do is add

amb :: () -> () -> ()

amb p q executes p and q in parallel, and terminates just as soon as either one of p and q terminates

Note that with amb, it's possible to execute an infinite number of expressions in parallel to see whether at least one of them terminates. You just give half the processor time to the first, one quarter to the second, one eighth to the third etc. But one cannot verify that they all terminate, one can only do that for a finite number of expressions.

Hmm, logical "or" over infinite sets, logical "and" over finite sets. Smells like some kind of topological space...

Lot of useful feedback. When I get a moment I'll add some corrections in response. This really is a minefield of a topic even though it seems to trivial!

Olivier,

My definition of monotone appears to agree with the computer science literature I have at home. But I agree, it's different from what a mathematician would say.

Great post, I never realized the complexity in such simple functions. But I managed to write an f4:

import System.IO.Unsafe

import Control.Exception

f4 x = unsafePerformIO $ Control.Exception.catch (evaluate x >> return loop) (\_ -> return ())

f4 loop does equal (), but f4 () will throw an exception (usual bottom behaviour) only if loop has already been evaluated, otherwise it goes into an infinite loop and never returns. Your f3 has this strange behaviour as well...but I guess they are both _|_?

I guess you can safely remove the line:

* 3 in a lazy language like Haskell when working completely lazily

So it looks more consistent. Because even we use `seq', Haskell is still a lazy language. It doesn't change at all with the way we use it. _|_ is an inhabitant of ()->(), just like _|_ is an inhabitant of any type.

yin,

In Haskell, every type has a _|_. But in a completely lazy context, \x -> _|_ is indistinguishable from _|_. So I do mean 3, not 4.

Post a Comment

<< Home