Finite Differences of Types

Finite Differences of Real-Valued Functions

Conor McBride's discovery that you can differentiate container types to get useful constructions like zippers has to be one of the most amazing things I've seen in computer science. But seeing the success of differentiation suggests the idea of taking a step back and looking at finite differences.

Forget about types for the moment and consider functions on a field R. Given a function f:R→R we can define Δf:R×R→R by

Δf(x,y) = (f(x)-f(y))/(x-y)

Δ is the finite difference operator. But does it make any kind of sense for types? At first it seems not because we can't define subtraction and division of types. Can we massage this definition into a form that uses only addition and multiplication?

First consider Δc where c is a constant function. Then Δc(x,y)=0.

Now consider the identity function i(x)=x. Then Δi(x,y)=1.

Δ is linear in the sense that if f and g are functions, Δ(f+g) = Δf+Δg.

Now consider the product of two functions, f and g.

Δ(fg)(x,y) = (f(x)g(x)-f(y)g(y))/(x-y)

= (f(x)g(x)-f(x)g(y)+f(x)g(y)-f(y)g(y))/(x-y)

= f(x)Δg(x,y)+g(y)Δf(x,y)

So now we have a Leibniz-like rule. We can compute finite differences of polynomials without using subtraction or division! What's more, we can use these formulae to difference algebraic functions defined implicitly by polynomials. For example consider f(x)=1/(1-x). We can rewrite this implicitly, using only addition and multiplication, as

f(x) = 1+x f(x)

Differencing both sides we get

Δf(x,y) = x Δf(x,y)+f(y)

That tells us that Δf(x,y) = f(x)f(y).

Finite Differences of Types

We're now ready to apply our operator to types. Instead of functions on the reals we work with functors on the set of types. A good first example container is the functor F(X)=XN for an integer N. This is basically just an array of N elements of type X. We could apply the Leibniz rule repeatedly, but we expect to get the same result as if we'd worked over the reals. So setting f(x)=xN we get

Δf(x,y) = (xN-yN)/(x-y) = xN-1+xN-2y+xN-3y2+...+yN-1

So we know that on types, ΔF(X,Y) = XN-1+XN-2Y+...+YN-1.

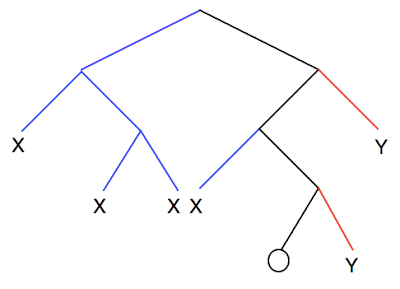

There's a straightforward interpretation we can give this. Differentiating a type makes a hole in it. Finite differencing makes a hole in it, but everything to the left of the hole is of one type and everything on the right is another. For example, for F(X)=X3, ΔF(X,Y)=X2+XY+Y2 can be drawn as:

If you've been reading the right papers then at this point it should all become familiar. Finite differencing is none other than dissection, as described by Conor in his Jokers and Clowns paper. I don't know if he was aware that he was talking about finite differences - the paper itself talks about this being a type of derivative. It's sort of implicit when he writes the isomorphism:

right :: p j + (Δp c j , c) → (j , Δp c j ) + p c

With a little rearrangement this becomes the definition of finite difference.

Now that we've recognised dissection as finite difference we can reason informally about dissection using high school algebra. For example, we already know that lists, defined by L(X) = 1+X L(X) can be informally thought of as L(X)=1/(1-X). So using the example I gave above we see that ΔL(X,Y)=1/((1-X)(1-Y)) = L(X)L(Y). So the dissection of a list is a pair of lists, one for the left elements, and one for the right elements. Just what we'd expect.

Another example. Consider the trees defined by T(X)=X+T(X)2. Informally we can interpret this as T(X)=(1+√(1-4X))/2. A little algebraic manipulation, using (√x-√y)(√x+√y) = x-y shows that

ΔT(X,Y) = 1/(1-(T(X)+T(Y))

In other words, a dissection of a tree is a list of trees, each of which is a tree of X or a tree of Y. This corresponds to the fact that if you dissect a tree at some element, and then follow the path from the root to the hole left behind, then all of the left branches (in blue) are trees of type X and all of the right branches (in red) are trees of type Y.

If you're geometrically inclined then you can think of types with holes in them as being a kind of tangent to the space of types. Along those lines, dissections become secants. I think this geometric analogy can be taken a lot further and that in fact a non-trivial piece of differential geometry can be made to work with types. But that's for another day.

Oh, I almost forgot. Derivatives are what you get when you compute finite differences for points really close to each other. So I hope you can see that Δf(x,x)=df/dx giving us holes in terms of dissections. Conor mentions this in his paper.

We should also be able to use this approach to compute finite differences in other algebraic structures that don't have subtraction or division.

I can leave you with some exercises:

1. What does finite differencing mean when applied to both ordinary and exponential generating functions?

2. Can you derive the "chain rule" for finite differences? This can be useful when you compute dissections of types defined by sets of mutually recursive definitions.

3. Why is

right, defined above, a massaged version of the definition of finite difference? (Hint: define d=((f(x)-f(y))/(x-y). In this equation, eliminate the division by a suitable multiplication and eliminate the subtraction by a suitable addition. And remember that (,) is Haskell notation for the product of types.)

23 Comments:

You should investigate interval arithmetic and interval constraints. Interval constraints are used to deal with the explosive ranges that accumulate with interval arithmetic. This is relevant to floating point numbers so you can have very accurate calculations (bounded by the smallest different floating numbers possible).

e.g. http://webhome.cs.uvic.ca/~bmoa/icsp.ppt

I find finitely differentiating T(X)=X+T(X)^2 directly easier. It becomes ΔT(X,Y) = 1 + (T(X) + T(Y))ΔT(X,Y) which is obviously a list of (T(X) + T(Y)).

One typo: "That tells us that Δf(x,y) = 1/(f(x)f(y))." I think you got 2 formulas confused here.

I just did the tree example to show it works even for algebraic functions like square root, not to show it's easier :-) But in general, I find thinking about dissection as finite differences does make it a lot easier.

Okay - so categorically this is requiring finite products, finite sums (and exponentials for your X^N example)?

What I'd be very curious about is how well this generalizes to categories with less structure - monoidal instead of cartesian, for instance.

Permutations and combinations crossed my mind.

I think that

(f(x)g(x)-f(x)g(y)+f(x)g(y)-f(y)g(y)/(x-y)

is missing a paren before the /.

(f(x)g(x)-f(x)g(y)+f(x)g(y)-f(y)g(y))/(x-y)

Creighton,

No exponentials needed here as N is an integer.

With a bit of trickery I did once sort of get exponentials going with derivatives. But I don't think anything similar will work for finite differences and exponentials.

You refer to X^N as the type of arrays of elements of X of length N, but shouldn't they be thought of instead as N-tuples? (I guess it's a minor difference in terminology, but I think of ‘array’ as specifically connoting extensibility, with some sort of push/pull operation.)

What about second order dissectioning?

F.e. Δ^2(FG)(X,Y,Z) = F(X)Δ^2G(X,Y,Z) + ΔF(X,Y)ΔG(Y,Z) + Δ^2F(X,Y,Z)G(Z)

(I.e. both holes in G, one hole in F and one in G or both holes in F.)

I couldn't manage to derive this from second order finite difference. But maybe I did something wrong.

Ah, I forgot that you can choose which hole goes to F and which to G.

So: Δ^2(FG)(X,Y,Z) = F(X)Δ^2G(X,Y,Z) + 2ΔF(X,Y)ΔG(Y,Z) + Δ^2F(X,Y,Z)G(Z)

This can be derived from Δ^2(f)(x,y,z) = (f(x) - 2f(y) + f(z)) / (x - y) / (y - z)

Perhaps my question makes no sense, but what would it mean to define the "exponential" type as the type that is equal to its own derivative?

Or, can you do "type" differential equations?

Still doesn't seem right. Can't get the chain rule to work. Looking at the holes it should be something like:

Δ^2(FG)(X,Y,Z) = Δ^2G(X,Y,Z)DF(G(X),G(Z)) + DG(X,Y)DG(Y,Z)Δ^2F(G(X),G(Y),G(Z))

(I.e. the 2 holes in the same part of F or in different parts of F)

Sjoerd, there's no need for second differences in the chain rule. We want the first difference of the composition of two functions:

Informally:

Δ(F o G)(X,Y)

=(F(G(X))-F(G(Y)))/(X-Y)

=(F(G(X)-F(G(Y))/(G(X)-G(Y)) . (G(X)-G(Y))/(X-Y)

= ΔF(G(X),G(Y)) . ΔG(X,Y)

That can now be verified using Conor's definition of dissection. When Y=X we get the usual chain rule.

I think this would have been tricky to derive without seeing dissection as finite difference.

I was specifically going for the second difference of function composition, i.e. the difference equivalent of (fg)''(x) = f''(g(x))g^2(x) + g''(x)f'(g(x))

The problem is that I'm not sure about what the formula of second order difference should be exactly.

But I agree that having the difference view makes things easier.

I derived the first difference by guessing what the difference equivalent of the chain rule would be, and confirming the result by filling in the difference formulas.

Conor will probably agree as well, as the C&J paper says: "Of course, what’s missing here is a more semantic characterisation of dissection, with respect to which the operational rules for /|\ p may be justified."

Sjoerd,

Sounds like you're trying to find a Faa di Bruno style formula. I think there may be a nice way of approaching that but I have no time right now...

Hey Dan,

i forget did you already post on the zipper-comonad connection to spell out the connection between differentiation and comonads?

Best wishes,

--greg

leihaus,

I just talked about the easy case of an N-element array here. Otherwise it's all in Conor's papers.

See Differential Logic and refs therein.

Jon,

Interesting! That looks remarkably like a side project of mine. Unfortunately I can't read the web site right now because despite following lots of instructions I've never managed to make the fonts work for the n-category cafe under Linux. I'll see how it looks under MacOSX tonight.

There's another version here.

I'm really new to this stuff, but I find it fascinating. I'm a bit confused however: what does it mean to add two types together? i.e. it makes sense to me that X^2 is (X,X), but I don't understand, for example, why a list of Xs has type L(X)=1+X L(X). Do we just think of + as a form of union? Could you recommend a reference on this stuff?

Thanks! Duncan

Duncan,

You could try here if you're not a Haskell programmer (yet). '+' is disjoint union. Another place is here.

"I think this geometric analogy can be taken a lot further and that in fact a non-trivial piece of differential geometry can be made to work with types"

care to spill the beans on what you're thinking of? =)

Post a Comment

<< Home