Beyond Regular Expressions: More Incremental String Matching

In my last post I showed how to incrementally match long strings against regular expressions. I now want to apply similar methods to matching languages that can't be described by regular expressions. (Note that 'language' is just jargon for a set of strings that meet some criterion.) In particular, regular expressions can't be used to test a string for balanced parentheses. This is because we need some kind of mechanism to count how many open parentheses are still pending and a finite state machine can't represent arbitrary integers.

So let's start with a slightly more abstract description of what was going on last time, so we can see the bigger picture. We were storing strings in balanced trees, with a kind of 'measurement' or 'digest' of the string stored in the nodes of the tree. Each character was mapped to an element of a monoid by a function called measure, and the measurement extends to entire strings if you mappend together the measurements of the individual characters. So what we have is a function f :: String -> M taking strings to some type M (in the last post M was a type of array) with the properties:

f (a ++ b) == f a `mappend` f b

f [] == mempty

By noticing that String is itself a monoid we can write this as

f (a `mappend` b) == f a `mappend` f b

f mempty == mempty

Anything satisfying these laws is called a monoid homomorphism, or just homomorphism for short.
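To make the two laws concrete, here is a minimal sketch using a much simpler homomorphism than the one from the last post: the length of a string, living in the additive monoid `Sum Int` from `Data.Monoid`. The names `countChars`, `respectsAppend` and `respectsEmpty` are invented for this illustration and don't appear in the original code.

```haskell
import Data.Monoid (Sum(..))

-- Hypothetical example homomorphism (not from the original post):
-- send a string to its length in the additive monoid Sum Int.
countChars :: String -> Sum Int
countChars = foldMap (const (Sum 1))

-- First law: f (a `mappend` b) == f a `mappend` f b
respectsAppend :: String -> String -> Bool
respectsAppend a b =
  countChars (a `mappend` b) == (countChars a `mappend` countChars b)

-- Second law: f mempty == mempty
respectsEmpty :: Bool
respectsEmpty = countChars mempty == mempty
```

Length is a homomorphism, but a useless one for our purposes: it throws away far too much to decide membership of any interesting language.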

So the technique I used worked like this: I found a homomorphism from String to some type with the useful property that for any string s, f s still contains all the information required to figure out if we're dealing with a member of our language. If f turns a string into something more efficient to work with then we can make our string matching more efficient.

Now I want to make the notion of "contains all the information required" more precise by considering an example. Consider strings that consist only of the characters ( and ). Our language will be the set of strings whose parentheses balance. In other words the total number of ( must match the total number of ), and as we scan from left to right we must never see more ) than (. For example, ()()() and ((()))() are in our language, but )()()( isn't. This language is called the Dyck language.

Suppose we're testing whether or not some string is in the Dyck language. If we see () as a substring then if we delete it from the string, it makes no difference to whether or not the string is in the Dyck language. In fact, if we see (()), ((())), (((()))) and so on they can all be deleted. On the other hand, you can't delete )( without knowing about the rest of the string. Deleting it from ()() makes no difference to its membership in the Dyck language, but deleting it from )(() certainly does.
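Here's a small sketch of carrying that deletion through to the end. The function `cancelPairs` (a name invented for this illustration) removes () again and again until no such substring is left, so you can compare what remains of a string before and after deleting a substring by hand.

```haskell
-- Repeatedly delete "()" from a string of parentheses until none remain.
-- `cancelPairs` is a name made up for this sketch.
cancelPairs :: String -> String
cancelPairs = reverse . foldl step []
  where
    -- The accumulator holds the already-reduced prefix in reverse order,
    -- so its head is the most recent surviving character.
    step :: String -> Char -> String
    step ('(' : rest) ')' = rest      -- a pending ( meets ): delete the pair
    step acc          c   = c : acc   -- otherwise keep the character
```

A string is in the Dyck language exactly when nothing is left over. Both ()() and () reduce to the empty string, whereas )(() leaves )( behind.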

So given a language L, we can say that two strings, x and y, are interchangeable with respect to L if any time we see x as a substring of another string we can replace it with y, and vice versa, without making any difference to whether the string is in the language. Distinguishing between interchangeable strings is a waste of memory: if we're testing for membership of L there's no need to tell them apart. So we'd like our measurement homomorphism to map all interchangeable strings to the same value. But we don't want to map any more strings to the same value, because then we lose information that tells us if a string is an element of L. A homomorphism that strikes this balance perfectly is called the 'canonical homomorphism', and the image of the set of all strings under this homomorphism is called the syntactic monoid. By 'image', I simply mean all the possible values that could arise from applying the homomorphism to all possible strings.

So let's go back to the Dyck language. Any time we see () we can delete it. But if we delete every occurrence of () from a string, and keep going until none are left, then all we have left is a bunch of ) followed by a bunch of (. Let's say it's p of the former and q of the latter, which I'll write as )^p(^q. Every string of parentheses can be distilled down to a pair of integers ≥0, (p,q). But does this go far enough? Could we distill any further? Well, for any choice of (p,q) it's a good exercise to see that for any other choice of (p',q') there's always a pair of strings, identical except that one contains )^p(^q where the other contains )^p'(^q', such that exactly one of them is in the Dyck language. So you can't distill any further. Which means we have our syntactic monoid and canonical homomorphism. In this case the monoid is called the bicyclic monoid and we can implement it as follows:

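> {-# LANGUAGE TypeSynonymInstances,FlexibleInstances,MultiParamTypeClasses #-}

> import Data.Foldable
> import Data.Monoid
> import Data.FingerTree hiding (fromList)
> import qualified Data.List as L

> -- B p q stands for the distilled string )^p(^q.
> data Bicyclic = B Int Int deriving (Eq,Show)

> hom :: Char -> Bicyclic
> hom '(' = B 0 1
> hom ')' = B 1 0

> -- Since GHC 8.4 a Monoid instance needs a Semigroup instance,
> -- and mappend defaults to (<>).
> instance Semigroup Bicyclic where
>     B a b <> B c d = B (a-b+max b c) (d-c+max b c)

> instance Monoid Bicyclic where
>     mempty = B 0 0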

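Where did that formula for combining two elements come from? Consider )^a(^b)^c(^d. We can delete () from the middle many times over: min(b,c) times, to be precise, until either the ( or the ) in the middle run out. That leaves )^(a+c-min(b,c)) followed by (^(b+d-min(b,c)). Since min(b,c) = b+c-max(b,c), the two exponents are a-b+max(b,c) and d-c+max(b,c), which is exactly what the code computes.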
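If you'd like to see the arithmetic checked rather than take it on trust, here's a minimal sketch that compares the closed form using max with the more literal "cancel min(b,c) pairs from the middle" version. Elements are written as plain pairs (p,q) standing for )^p(^q, and all three function names are invented for this illustration.

```haskell
-- Literal version: cancel k = min b c copies of () in the middle
-- of )^a (^b )^c (^d.
combineByCancelling :: (Int, Int) -> (Int, Int) -> (Int, Int)
combineByCancelling (a, b) (c, d) = (a + c - k, b + d - k)
  where k = min b c

-- Closed form, the same expression as in the Bicyclic instance.
combineByMax :: (Int, Int) -> (Int, Int) -> (Int, Int)
combineByMax (a, b) (c, d) = (a - b + m, d - c + m)
  where m = max b c

-- Exhaustive comparison over all pairs with entries in [0..n].
agreeUpTo :: Int -> Bool
agreeUpTo n = and
  [ combineByCancelling x y == combineByMax x y
  | x <- pairs, y <- pairs ]
  where pairs = [ (p, q) | p <- [0 .. n], q <- [0 .. n] ]
```

Note that the operation isn't commutative: combining (0,1) with (1,0) is the string () and gives (0,0), while the other order is )( and gives (1,1).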

Now we can more or less reproduce the code of last week and get a Dyck language tester. Once we've distilled a string down to (p,q) we only need to test whether or not p=q=0 to see whether or not our parentheses are balanced:

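> matches' :: String -> Bool
> matches' s = x==B 0 0 where
>     x = mconcat (map hom s)

> data Elem a = Elem { getElem :: a } deriving Show
> data Size = Size { getSize :: Int } deriving (Eq,Ord,Show)

> -- As with Bicyclic, the combining operation lives in Semigroup (GHC 8.4+).
> instance Semigroup Size where
>     Size m <> Size n = Size (m+n)

> instance Monoid Size where
>     mempty = Size 0

> -- Each character is measured by its size (always 1) and its Bicyclic value.
> instance Measured (Size,Bicyclic) (Elem Char) where
>     measure (Elem a) = (Size 1,hom a)

> type FingerString = FingerTree (Size,Bicyclic) (Elem Char)

> -- Insert character c so that it ends up at position i (counting from 0).
> insert :: Int -> Char -> FingerString -> FingerString
> insert i c z = l >< (Elem c <| r) where (l,r) = split (\(Size n,_) -> n>i) z

> string = empty :: FingerString

> matchesDyck :: FingerString -> Bool
> matchesDyck string = snd (measure string)==B 0 0

> -- Read edits such as (0,'(') from standard input, forever.
> loop :: FingerString -> IO ()
> loop string = do
>     print $ map getElem (toList string)
>     print $ "matches? " ++ show (matchesDyck string)
>     print "(Position,Character)"
>     r <- getLine
>     let (i,c) = read r
>     loop $ insert i c string

> main :: IO ()
> main = do
>     loop string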

There's a completely different way to test membership of the Dyck language. Replace each ( with 1 and ) with -1. Now scan from left to right keeping track of (1) the sum of all the numbers so far and (2) the minimum value taken by this sum. If the final sum and the final minimal sum are zero, then we have matching parentheses. But we need to do this on substrings without scanning from the beginning in one go. That's an example of a parallel prefix sum problem and it's what I talked about here.
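A minimal sequential sketch of this test, scanning from the beginning in one go (so not yet the incremental version). The name `balancedBySums` is invented here, and giving any character other than ( and ) a weight of 0 is an assumption of the sketch rather than something the text specifies.

```haskell
-- Sequential version of the sum/minimum test.
-- `balancedBySums` is a name made up for this sketch.
balancedBySums :: String -> Bool
balancedBySums s = last sums == 0 && minimum sums == 0
  where
    weight :: Char -> Int
    weight '(' = 1
    weight ')' = -1
    weight _   = 0   -- assumption: other characters are ignored

    -- Running sums, starting from 0 for the empty prefix, so the
    -- list is never empty and `last` and `minimum` are safe.
    sums :: [Int]
    sums = scanl (+) 0 (map weight s)
```

For )()()( the final sum is zero but the minimum is -1, so the string is rejected even though the counts of ( and ) agree.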

So here's an extended exercise: adapt the parallel prefix sum approach to implement incremental Dyck language testing with fingertrees. You should end up with a canonical homomorphism that's similar to the one above. It'll probably be slightly different but ultimately equivalent.
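If you'd rather attempt the exercise unaided, skip this. Otherwise, here's one possible starting point, covering only the monoid and not the fingertree wiring: summarise a substring by its total sum and its minimum prefix sum. The names `SumMin`, `summarize` and `balanced` are invented for this sketch, and it assumes a GHC recent enough (8.4 or later) to have `Semigroup` in the Prelude.

```haskell
-- SumMin s m: total sum s and minimum prefix sum m of a substring.
-- The empty prefix counts, so every value built below has m <= 0 and m <= s.
data SumMin = SumMin Int Int deriving (Eq, Show)

instance Semigroup SumMin where
  -- Prefix sums of the right part are shifted by the left part's total.
  SumMin s1 m1 <> SumMin s2 m2 = SumMin (s1 + s2) (min m1 (s1 + m2))

instance Monoid SumMin where
  -- An identity only for values obeying the invariant noted above.
  mempty = SumMin 0 0

summarize :: Char -> SumMin
summarize '(' = SumMin 1 0
summarize ')' = SumMin (-1) (-1)
summarize _   = mempty   -- assumption: other characters are ignored

balanced :: String -> Bool
balanced s = foldMap summarize s == SumMin 0 0
```

The link with the bicyclic monoid is that a summary with sum s and minimum m corresponds to p = -m and q = s-m.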

And here's an even more extended exercise: protein sequences are sequences from a 20 letter alphabet. Each letter can be assigned a hydrophobicity value from certain tables. (Pick whichever table you want.) The hydrophobicity of a string is the sum of the hydrophobicities of its letters. Given a string, we can give it a score corresponding to the largest hydrophobicity of any contiguous substring in it. Use fingertrees and a suitable monoid to track this score as the string is incrementally edited. Note how widely separated substrings can suddenly combine together as stuff between them is adjusted.
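Again, skip this if you want to work it out yourself. One possible shape for the monoid, sketched without the fingertree wiring, tracks four numbers per substring: the total, the best prefix, the best suffix and the best substring overall. All the names below are invented for this sketch, no real hydrophobicity values are included (the table is passed in as a function), and allowing the empty substring, so that scores are never negative, is an assumption.

```haskell
-- Summary of a string for the largest-substring-sum problem.
data Best = Best
  { total      :: Double  -- sum over the whole string
  , bestPrefix :: Double  -- largest sum of any prefix (empty allowed)
  , bestSuffix :: Double  -- largest sum of any suffix (empty allowed)
  , bestInside :: Double  -- largest sum of any contiguous substring
  } deriving (Eq, Show)

instance Semigroup Best where
  Best t1 p1 s1 b1 <> Best t2 p2 s2 b2 = Best
    (t1 + t2)
    (max p1 (t1 + p2))
    (max s2 (s1 + t2))
    (maximum [b1, b2, s1 + p2])  -- the best substring may straddle the join

instance Monoid Best where
  mempty = Best 0 0 0 0

-- Summary of a single letter with hydrophobicity h.
letter :: Double -> Best
letter h = Best h (max 0 h) (max 0 h) (max 0 h)

-- Score a sequence, given whichever hydrophobicity table you chose.
score :: (Char -> Double) -> String -> Double
score table = bestInside . foldMap (letter . table)
```

The `s1 + p2` term is where widely separated substrings get to combine: the best suffix of the left half joins up with the best prefix of the right half.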

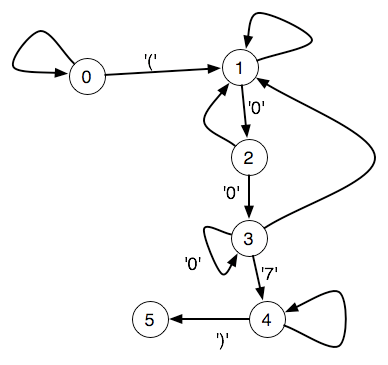

If you're interested in Dyck languages with multiple types of parentheses that need to match, you need something much more fiendish.