What is Topology?

Of all the branches of mathematics I have studied, Topology was the one with the biggest mismatch between what I expected and what I actually found. Eventually I reached Algebraic Topology, and that is all about the things you expect: Möbius strips, Klein bottles, pairs of pants, knots and handlebodies. But the subject starts with the machinery of Point Set Topology, and in particular with a set of axioms that can seem highly unmotivated. So I’m going to describe Topology in a way that is completely different from what you’ll find in any Topology textbook I know, but which does draw on published ideas in Computer Science. Nonetheless, I am describing standard off-the-shelf point set topology, just dressing it with different intuitions.

Suppose I have some set X and a (possibly infinite) collection of machines. To each machine M is associated a subset of X, U. The idea is that given an element x of X, you can use the machine M to test to see if x is an element of U. The catch is that the machine can only reply “yes”, and that you don’t know how long to wait for the answer, only that if the answer is “yes”, the machine will eventually, after a finite time, give you an answer. If x isn’t in U, the machine sits there forever, neither saying “yes” nor “no”. We’ll call a set V “observable” if for every x in V, we can use a machine, or some combination of machines, to show (in a finite time of course) that x is in V. So all of the U’s associated to machines M are observable.
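To make the "yes or silence" behaviour concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: a machine is modelled as a generator that yields False while it is still working and True once it says “yes”, and the names `make_machine`, `observe` and `positive` are hypothetical.

```python
from itertools import count

def make_machine(steps_needed):
    """Build a machine from a hypothetical cost function.

    steps_needed(x) is the number of steps before the machine says "yes",
    or None if x is not in the machine's set U (it then runs forever).
    """
    def machine(x):
        needed = steps_needed(x)
        for step in count(1):
            # False means "still working"; True means "yes".
            yield needed is not None and step >= needed
    return machine

def observe(machine, x, budget):
    """Run the machine on x for at most `budget` steps.

    True means "yes, x is in U". False only means "no answer yet";
    it never certifies that x lies outside U.
    """
    for _, said_yes in zip(range(budget), machine(x)):
        if said_yes:
            return True
    return False

# Illustrative machine for U = the positive reals. It takes longer the
# closer x is to 0, so no fixed waiting time works for every x in U.
positive = make_machine(lambda x: int(1 / x) + 1 if x > 0 else None)

print(observe(positive, 0.5, 10))      # answers quickly
print(observe(positive, 0.001, 10))    # in U, but we gave up too early
print(observe(positive, 0.001, 2000))  # same x, longer wait
print(observe(positive, -1.0, 1000))   # not in U: silence, however long we wait
```

Note that a False from `observe` is indistinguishable between "not in U" and "didn't wait long enough", which is exactly the catch described above.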

Now suppose we have two machines, M and N, associated with sets U and V respectively. We input x to both machines. If x is in U intersection V then eventually both machines will reply “yes”. We don’t know how long to wait, but we know it’ll be a finite wait. Similarly, if x is in U union V we know that one machine or the other will eventually say “yes”. So U intersection V and U union V are both observable.
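The two-machine argument can be sketched by stepping both machines in lockstep. This is only an illustration under assumed conventions: machines are generators that yield False until they say “yes”, and `machine_for`, `both`, `either` and `observe` are hypothetical names.

```python
from itertools import count

def machine_for(predicate, delay):
    """Hypothetical machine: says "yes" after `delay` steps if predicate(x)
    holds, and otherwise keeps yielding False forever."""
    def machine(x):
        for step in count(1):
            yield predicate(x) and step >= delay
    return machine

def both(m, n):
    """Machine for U intersection V: step m and n together and say "yes"
    once each of them has said "yes" at some point."""
    def machine(x):
        m_yes = n_yes = False
        for a, b in zip(m(x), n(x)):
            m_yes = m_yes or a
            n_yes = n_yes or b
            yield m_yes and n_yes
    return machine

def either(m, n):
    """Machine for U union V: step m and n together and say "yes"
    as soon as one of them does."""
    def machine(x):
        for a, b in zip(m(x), n(x)):
            yield a or b
    return machine

def observe(machine, x, budget):
    """True if the machine says "yes" within `budget` steps."""
    return any(said_yes for _, said_yes in zip(range(budget), machine(x)))

is_positive = machine_for(lambda x: x > 0, delay=3)
below_ten = machine_for(lambda x: x < 10, delay=5)

print(observe(both(is_positive, below_ten), 4, 10))     # both say yes in time
print(observe(both(is_positive, below_ten), 12, 100))   # below_ten stays silent
print(observe(either(is_positive, below_ten), 12, 10))  # is_positive is enough
```

The lockstep trick for `both` relies on there being finitely many machines to wait for: with infinitely many, there need be no finite time by which all of them have answered.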

Now suppose we have a (possibly infinite) collection of observable sets Ui. Let U be the union of all the Ui. Given any element x of U, it must be a member of one of the Ui. But every Ui is observable, so there is some combination of machines that can prove that x is in Ui. And hence we can show that x is in U. So unions of infinite collections of observable sets are observable.

(Note that in the previous paragraph I didn’t say you had to have an algorithm for saying which Ui you should use, given x. I’m just saying that some Ui must exist. This means I’m not talking about semidecidability. But observability is similar to semidecidability.)

So what are the machines that I’m talking about? Let’s leave that undecided. We can use the above observations to make a definition:

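Given a set X, a system of observables is a set of subsets of X, called observable sets, with the properties

- X and the empty set are observable

- arbitrary unions of observable sets are observable

- finite intersections of observable sets are observable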
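On a finite set the three properties (X and the empty set are observable, unions of observable sets are observable, finite intersections of observable sets are observable) can be checked by brute force. The following is a small sketch with a hypothetical helper `is_system_of_observables`; it assumes X is finite, so checking pairs of sets is enough.

```python
from itertools import combinations

def is_system_of_observables(X, observables):
    """Check the three properties for a finite set X.

    `observables` is a collection of subsets of X. Because the collection
    is finite, closure under pairwise unions and pairwise intersections
    implies closure under all unions and all finite intersections.
    """
    opens = {frozenset(s) for s in observables}
    if frozenset(X) not in opens or frozenset() not in opens:
        return False
    for a, b in combinations(opens, 2):
        if a | b not in opens or a & b not in opens:
            return False
    return True

X = {1, 2, 3}
nested = [set(), {1}, {1, 2}, {1, 2, 3}]
missing_union = [set(), {1}, {2}, {1, 2, 3}]  # {1} union {2} = {1, 2} is absent

print(is_system_of_observables(X, nested))         # True
print(is_system_of_observables(X, missing_union))  # False
```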

So let me fill in with an example. Suppose we have some devices for measuring signed lengths (or, say, x-coordinates in some frame of reference). These lengths take values in the real line, R. If the device measures a length within its observable set it eventually says “yes”, otherwise it hangs until you press reset. Suppose x is in a device’s observable set. Then some mechanism eventually leads to a “yes” response. But if the device is based on reasonable physical principles, it must be possible to tweak x by a tiny amount and still trigger the same mechanism, otherwise the device would, in some sense, be infinitely discriminating around x. So if x is in an observable set U, then the interval [x-e,x+e] must also lie inside U for some e > 0, possibly very small. So let’s define a system of observables on the real line by saying U is observable if for every x in it, there is some e > 0 such that [x-e,x+e] is contained in the set. Intuitively, these are sets that are “fuzzy round the edges”. That’s a reasonable property of a real world measuring device.
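For an open interval (a, b), or a union of such intervals, the e in this definition can be written down explicitly. Here is a sketch, with hypothetical helper names `margin` and `margin_in_union`, that takes half the distance to the nearer endpoint.

```python
def margin(x, a, b):
    """For the open interval (a, b), return some e > 0 with [x - e, x + e]
    inside (a, b), or None if x is not in (a, b).
    Half the distance to the nearer endpoint always works."""
    if not (a < x < b):
        return None
    return min(x - a, b - x) / 2

def margin_in_union(x, intervals):
    """Same question for a union of open intervals given as (a, b) pairs:
    any one interval containing x supplies a margin."""
    for a, b in intervals:
        e = margin(x, a, b)
        if e is not None:
            return e
    return None

print(margin(0.5, 0.0, 1.0))                             # 0.25
print(margin(0.0, 0.0, 1.0))                             # None: 0 is an endpoint, not a member
print(margin_in_union(2.5, [(0.0, 1.0), (2.0, 3.0)]))    # 0.25
```

By contrast, the half-open set [0, 1) is not observable in this sense: it contains 0, but no interval [0-e, 0+e] with e > 0 fits inside it.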

Suppose we can augment our devices by applying transformations to a value x before applying the machine. For example, if the machine works optically you could imagine applying a magnifying lens to it to improve its accuracy. For a 10x lens, say, we’d apply the function x -> 10x to our point, and then apply a machine M to see if 10x lies in U. More generally, if our transforming function is f, then applying that to x converts our machine into a machine that tests whether x is an element of the set

f⁻¹(U) = {y | f(y) in U}
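In code, pre-processing the input is just function composition: the new machine feeds f(y) to the old one. Here is a sketch under the same assumed model as before (a machine is a generator that yields False until it says “yes”); `machine_for`, `pull_back` and `observe` are hypothetical names.

```python
from itertools import count

def machine_for(predicate, delay):
    """Hypothetical machine: says "yes" after `delay` steps if predicate(x)
    holds, and otherwise keeps yielding False forever."""
    def machine(x):
        for step in count(1):
            yield predicate(x) and step >= delay
    return machine

def pull_back(machine, f):
    """Machine for the preimage of U under f: transform the input with f,
    then hand the result to the machine for U."""
    def pulled(y):
        return machine(f(y))
    return pulled

def observe(machine, x, budget):
    """True if the machine says "yes" within `budget` steps."""
    return any(said_yes for _, said_yes in zip(range(budget), machine(x)))

# U is the open interval (9, 11); the 10x lens turns its machine into
# one for the preimage, the open interval (0.9, 1.1).
in_U = machine_for(lambda x: 9 < x < 11, delay=4)
through_lens = pull_back(in_U, lambda x: 10 * x)

print(observe(through_lens, 1.0, 10))   # 10.0 is in U
print(observe(through_lens, 0.95, 10))  # 9.5 is in U
print(observe(through_lens, 1.2, 100))  # 12.0 is not in U: silence
```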

If f is the product of a physical process, it’s reasonable to expect f to be continuous at all of its arguments. So what does f⁻¹(U) look like for continuous f? Well, by definition, f is continuous at x if for all d > 0 there is an e > 0 such that |x-x’|<e implies |f(x)-f(x’)|<d. So if x is in f⁻¹(U), then f(x) has a small interval around it in U (because by stipulation, U is observable) and so x has a small interval around it in f⁻¹(U) (by continuity of f). Conversely, if f⁻¹(U) is observable for every observable U, then taking U to be the set of points within d of f(x) gives an e that works at x, so f is continuous. In other words, the continuous functions are precisely those functions for which f⁻¹(U) is observable for all observable U, i.e. the functions that don't let us observe the unobservable.

So... I’ve talked about observability, and machines, and ways to pre-process the input to these machines. What does any of this have to do with topology? Well, it simply *is* topology. Not a generalisation, or a special case. What I have described is precisely the subject matter of topology. The only difference is that a “system of observables” is normally called a topology and an “observable” set is normally called an open set. And given a topology on a set A, and a topology on a set B, topologists define continuity by saying that f:A->B is continuous if f⁻¹ maps open sets of B to open sets of A.
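For finite sets this definition of continuity can be checked directly. The sketch below assumes the function is given as a Python dict and each topology as a list of sets; `preimage` and `is_continuous` are hypothetical helper names.

```python
def preimage(f, domain, subset):
    """The set of y in `domain` with f[y] in `subset` (f is a dict)."""
    return frozenset(y for y in domain if f[y] in subset)

def is_continuous(f, A, opens_A, opens_B):
    """f: A -> B is continuous if the preimage of every open set of B
    is an open set of A."""
    opens_A = {frozenset(s) for s in opens_A}
    return all(preimage(f, A, U) in opens_A for U in opens_B)

# Two-point spaces in which only one of the two points is observable on its own.
A = {1, 2}
opens_A = [set(), {1}, {1, 2}]
opens_B = [set(), {"a"}, {"a", "b"}]

f = {1: "a", 2: "b"}  # preimage of {"a"} is {1}, which is open
g = {1: "b", 2: "a"}  # preimage of {"a"} is {2}, which is not open

print(is_continuous(f, A, opens_A, opens_B))  # True
print(is_continuous(g, A, opens_A, opens_B))  # False
```

The function g fails because it would let us observe membership of {2}, a set that no machine on A can confirm.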

What I’ve tried to do in a few paragraphs is motivate the usual axioms of topology. Usually they are presented as a fait accompli which is justified after the fact by geometrical intuitions. I hope I’ve given a way to look at these axioms that makes them seem natural a priori and that spans both geometrical and computational ideas.

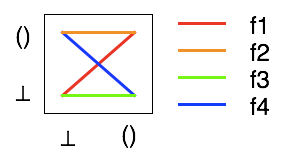

Anyway, now that we’re armed with the notion of a topology, I can get back to considering functions from () to ()...
