How Telescopes Really Work and What You Can See Through Them

For my birthday a few weeks ago (40!) I received an 8" Dobsonian telescope from my wife and her family. Of course the weather here in Northern California took a turn for the worse immediately after I brought it home, but there have still been a few clear nights.

One of the frustrating things about astronomy is that what you can see with an amateur telescope from your back yard in a well-lit city is quite different from what you can see from the wilderness with a telescope the size of a truck. Unfortunately, even a great book like The Backyard Astronomer's Guide is dominated by the latter type of picture (like that fantastic picture of the Andromeda galaxy on its cover). So I thought I'd tell it like it really is and give you an idea of what you can see from my location.

Before that, I want to mention something about telescopes. My telescope is a Newtonian reflector, and if you look at the pictures on Wikipedia you'll notice that the secondary mirror forms an obstruction that blocks some of the light entering the telescope. A frequently asked question is "why can't you see the obstruction through the scope?" In fact, if you put your hand in front of the aperture while the image is in focus, the effect on what you see is negligible: just a little darkening. The explanation is very simple, but nobody seems to phrase it the way I do.

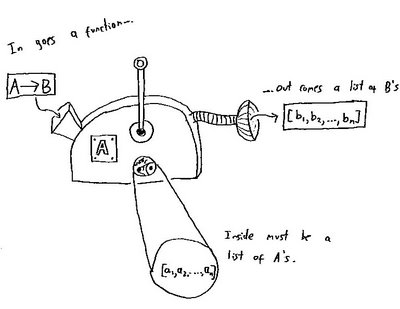

The idea is this: a lens that's in focus is a device that converts direction into position. Suppose the telescope is oriented along the x-axis. Think of a ray of light as having the equation y=mx+c. Think of m as the ray's direction and c as its position. If that ray passes through a lens or mirror and is projected onto a screen, denote the position at which it arrives on the screen by f(m,c). It's clearly a function of m and c. The crucial point is that for a screen at precisely the focal length away from the lens or mirror, f(m,c) is independent of c. That's the raison d'être of a telescope. All rays with gradient m arrive at the same point. So we end up with a bright image because we can collect lots of rays coming from the same direction. But also, any information about the ray's position, i.e. c, has been erased. Information about the shape of the obstruction is positional information contained in c. This has been erased, and hence you can't see the obstruction. In practice you really need to consider the primary lens (or mirror), plus the eyepiece, plus the lens of the eye projecting onto the retina, but the principle is the same. For the more formally inclined, the erasure of c information corresponds to a zero in a transfer matrix.

In brief: a lens or mirror focussed on infinity is a position eraser.
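For anyone who wants to see that zero appear, here's a minimal sketch using the standard paraxial (small-angle) ray transfer matrices for a thin lens followed by free travel to a screen. The focal length, aperture width, ray direction and the size of the blocked strip are all made-up illustrative numbers, not measurements of my telescope, and a single thin lens stands in for the whole mirror, eyepiece and eye chain.

```python
import numpy as np

def lens_then_screen(f, d):
    """Transfer matrix for a thin lens of focal length f followed by free
    travel over a distance d to a screen. It acts on column vectors (c, m):
    position c at the lens, gradient m (paraxial approximation)."""
    thin_lens = np.array([[1.0, 0.0],
                          [-1.0 / f, 1.0]])
    travel = np.array([[1.0, d],
                       [0.0, 1.0]])
    return travel @ thin_lens

f = 1.2                          # assumed focal length (hypothetical value)
m = 0.001                        # one shared direction for every incoming ray
c = np.linspace(-0.1, 0.1, 21)   # ray positions across an assumed aperture
rays = np.vstack([c, np.full_like(c, m)])

# Screen exactly at the focal length: the top-left entry, which multiplies c,
# is 1 - d/f = 0, so the landing position depends on m alone.
M = lens_then_screen(f, d=f)
hits = (M @ rays)[0]

# Crude obstruction: throw away the rays passing near the centre.
unblocked = np.abs(c) >= 0.02
hits_blocked = hits[unblocked]

# Screen slightly out of focus: c leaks back into the landing position.
M_defocused = lens_then_screen(f, d=0.9 * f)
defocused_hits = (M_defocused @ rays)[0]

print("coefficient of c in focus:", M[0, 0])
print("spread of hits in focus:", hits.max() - hits.min())
print("rays arriving:", hits.size, "unobstructed,", hits_blocked.size, "obstructed")
print("spread of hits out of focus:", defocused_hits.max() - defocused_hits.min())
```

In focus, every ray lands at the same place (f times m, up to rounding) whether or not the central strip is blocked; the obstruction only reduces how many rays arrive, which is the "little darkening". Move the screen away from the focal length and the spread in c shows up on the screen again, which is why an out-of-focus star does reveal the shadow of the secondary.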

My first two subjects were probably the same as every other amateur astronomer's. I started with the Andromeda galaxy. Through binoculars it looks like a faint fuzzy blob. And here's the truth of how it looks through a telescope: it looks like a fuzzy blob, at least from a well-lit city. I couldn't make out any kind of structure at all, let alone spiral arms. It didn't even look elliptical, just a circular blob with brightness falling off radially from the centre. I also saw one of its neighbours, M32 or M110. At first I thought that the fuzzy blob was the whole galaxy and that I needed more magnification to see detail. But now I think that I was seeing just the core of the Andromeda galaxy, with the arms filling a wider area but remaining invisible because they're no brighter than the sky in my part of the world.

The Orion nebula, on the other hand, was stunning! In outline, it looked remarkably like the picture on Wikipedia, but without the colour. When I switched to using a filter (a narrow-bandpass Lumicon UHC filter) it became even clearer. Not only could I clearly see the shape of the nebula but I could also see structure all the way through the cloud. It looked even better after I'd been viewing it for a while, probably as my eyes became better adapted to the dark. This was the best thing I've ever seen through a telescope!

I'll mention the last thing I looked at: γ Andromedae, otherwise known as Almach. It's a binary star system that can't be resolved with the naked eye. I used a wide field of view eyepiece and was disappointed to see that it still looked like a single star. But then I switched eyepieces and I saw it: a beautiful pair of stars, one brighter and yellowy orange and the other deep blue. You read about the colour of stars but it's often a disappointment. A single star on its own tends to look white with a hint of colour because the eye doesn't register colour well in such low-light conditions. But when you see two contrasting stars so close together it makes a world of difference. The deep blue of the smaller star was unmistakable, and the blue really was astonishingly blue. That must be quite a sight for the γ Andromedans. But I should add that the blue star is in fact a triple star system in its own right, so γ Andromedae is actually a quadruple star system. I was unable to resolve more than two stars (and you can only tell there are four by inference from spectroscopy, not direct viewing).

I also tried to find the Crab Nebula. I'm 99% sure that I was pointing at the right part of the sky and I did in fact see a faint smudge just at the limits of my perception. I had to jiggle the telescope around a bit just to be sure I wasn't imagining it, but sure enough, it appeared to be attached to the sky, not the scope or my eyes. But it certainly didn't look like the pictures.

Anyway, now you have a better idea of what you can see from a city.

Labels: astronomy