Caustics and Catastrophes

Caustics, at least the optical ones, are the bright regions you see when light is concentrated by refraction or reflection. Examples include the patterns you see on the bottom of a pool, the bright patches you might see on a wall opposite a curved window, the focal point of a lens when a parallel beam falls onto it, or the familiar cardioid shape you see in a cup of coffee. The twinkling of stars is caused by caustics, produced by atmospheric turbulence, passing across your retina. Caustics aren't always optical effects. For example, rogue waves at sea can be caused by refraction-like effects as waves move between regions of sea with different currents.

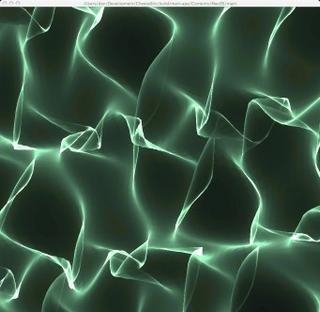

The shape of a caustic is a complicated function of the physical system. But whatever the system, you frequently end up with the same features. For example, in my image above you can see many regions where two twisty lines meet, forming a kind of long twisty V shape where the sides of the V protrude a little past the intersection to make a kind of swallowtail shape. This shape is universal. You'll see it in caustics formed by all kinds of refracting media. You'll see it in the kind of caustics formed by the gravitational fields of astronomical bodies in microlensing events. There are other characteristic shapes that frequently come up, and what makes them so interesting is that they are independent of the precise details of the physical system. The underlying explanation is catastrophe theory. Unfortunately, few people talk about catastrophe theory these days as it became tarnished through misapplication. I guess it was the chaos theory of the 70s. But like chaos theory, it has completely sound mathematical underpinnings.

Very roughly, in catastrophe theory we look at (infinitely) differentiable functions f: M → N where M and N are manifolds. The size of the set f⁻¹(x) may vary with x depending on how f 'folds' M into N. Because that size is a whole number, the map from x to the size of f⁻¹(x) has to be discontinuous if it's non-constant on a connected manifold N. Catastrophe theory is basically about the singular points where this set jumps in size. The interesting thing is that in low dimensions there are basically 7 types of 'interesting' singularity up to local diffeomorphism. This is known as Thom's theorem. These 7 types have interesting names, from the simple 'fold', through the swallowtail, to the parabolic umbilic. And you've seen the swallowtail already: it's what I pointed out in the caustic image above. The reason why these catastrophes appear in caustics is that you can think of an optical system as mapping incoming rays to the destination surface on which they eventually impinge. For non-trivial systems this is a many-to-one function and it can fold back on itself in all sorts of interesting ways. Thom's theorem therefore allows us to classify the types of shapes we may see in caustics without knowing specific details about the system.
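To make the "jump in size" concrete, here is a toy one-dimensional example of my own choosing, not something taken from a particular text: the map x ↦ x³ + ax from the line to itself. The hypothetical helper `preimage_count` counts the distinct real x that land on a given y, using the sign of the cubic's discriminant. For a < 0 the line gets folded over on itself, and the count jumps between 1 and 3 as y crosses a fold point.

```python
def preimage_count(a, y):
    """Number of distinct real x with x**3 + a*x == y.

    Rewrites the equation as the depressed cubic x^3 + p*x + q = 0
    with p = a and q = -y, then reads the answer off its discriminant.
    """
    disc = -4 * a**3 - 27 * y**2
    if disc > 0:
        return 3  # y lies between the two fold values: three preimages
    if disc < 0:
        return 1  # y lies outside the folded region: one preimage
    # disc == 0: y sits exactly on a fold value (a double root plus a
    # simple root), unless a == y == 0, which is a single triple root.
    return 1 if (a == 0 and y == 0) else 2


if __name__ == "__main__":
    # Sweep y across the folded region for a = -3 (fold values at y = -2, 2).
    for y in (-3, -2, 0, 2, 3):
        print(y, preimage_count(-3, y))
```

With a = -3 the fold values are y = ±2, so `preimage_count(-3, 0)` gives 3, `preimage_count(-3, 3)` gives 1, and exactly on the fold `preimage_count(-3, 2)` gives 2. With a > 0 there is no fold and the count is 1 everywhere.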

Anyway, I was interested in finding a way to generate caustic-like images cheaply. Armed with this knowledge that caustics can be seen as folds of some manifold mapping to another, I was able to produce a really cheap algorithm. Basically I take the plane. I deform it using a random function (e.g. Perlin noise or something synthesised in the Fourier domain). I then render the deformed plane. I do this by tessellating the plane as triangles. Each triangle in the tessellation represents a beam with triangular cross section. So the key thing is to ensure that the amount of light going through each triangle is the same before and after deformation. In other words, if the deformation makes a triangle twice as large I make it half as bright, and vice versa. That's it. Animate the deformation nicely and you get something that looks just like the patterns at the bottom of a pool, rendered in real time and without having to use any knowledge of optics. It essentially looks like caustics simply because of the universality of catastrophes, meaning that a wide variety of randomly made up deformations look like the right sort of thing. This paper does something similar except that they attempt to approximate the correct caustic for a given water surface whereas I'm just trying to capture a look. The image above is an example.
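The steps above can be sketched roughly as follows. This is a minimal pure-Python sketch under my own assumptions, not the code that made the image: the function names, the sum-of-sine-waves deformation (standing in for Perlin or Fourier-domain noise) and every parameter value are made up for illustration. Instead of rasterising each triangle with additive blending, it deposits each triangle's light at its deformed centroid, a crude stand-in that is only reasonable when the triangles are smaller than the pixels.

```python
import math
import random


def make_deformation(rng, waves=8, max_freq=4.0, strength=0.03):
    """Random smooth deformation of the plane: a sum of a few sine waves."""
    terms = [(rng.uniform(-max_freq, max_freq),   # wave vector, x part
              rng.uniform(-max_freq, max_freq),   # wave vector, y part
              rng.uniform(0.0, 2.0 * math.pi),    # phase
              rng.uniform(0.0, 2.0 * math.pi))    # direction of displacement
             for _ in range(waves)]

    def deform(x, y):
        dx = dy = 0.0
        for kx, ky, phase, angle in terms:
            s = strength * math.sin(2.0 * math.pi * (kx * x + ky * y) + phase)
            dx += s * math.cos(angle)
            dy += s * math.sin(angle)
        return x + dx, y + dy

    return deform


def area(a, b, c):
    """Unsigned area of the triangle with corners a, b, c."""
    return 0.5 * abs((b[0] - a[0]) * (c[1] - a[1])
                     - (b[1] - a[1]) * (c[0] - a[0]))


def caustic_image(n=96, res=48, strength=0.03, seed=0):
    """Return (image, brightnesses).

    image is a res x res list of mean brightness per pixel over the unit
    square; brightnesses holds one value per triangle, in traversal order.
    """
    deform = make_deformation(random.Random(seed), strength=strength)
    # rest[j][i] is the undeformed grid vertex in row j, column i.
    rest = [[(i / n, j / n) for i in range(n + 1)] for j in range(n + 1)]
    moved = [[deform(x, y) for (x, y) in row] for row in rest]
    image = [[0.0] * res for _ in range(res)]
    brightnesses = []
    for j in range(n):
        for i in range(n):
            # Two triangles per grid cell, given as (row, column) corners.
            for tri in (((j, i), (j, i + 1), (j + 1, i + 1)),
                        ((j, i), (j + 1, i + 1), (j + 1, i))):
                before = area(*(rest[r][c] for r, c in tri))
                pts = [moved[r][c] for r, c in tri]
                after = area(*pts)
                # Same light through the beam before and after:
                # twice the area means half the brightness.
                brightnesses.append(before / max(after, 1e-12))
                # Deposit the beam's light (brightness * deformed area,
                # which equals the rest area) at the deformed centroid.
                cx = sum(p[0] for p in pts) / 3.0
                cy = sum(p[1] for p in pts) / 3.0
                if 0.0 <= cx < 1.0 and 0.0 <= cy < 1.0:
                    px = min(int(cx * res), res - 1)
                    py = min(int(cy * res), res - 1)
                    image[py][px] += before * res * res
    return image, brightnesses
```

Calling `caustic_image()` returns a grid you can hand to any image writer, and rebuilding the deformation with slowly drifting phases would give the animation. Light that folds back onto the same pixel simply adds up, which is where the bright lines come from. A real-time version would draw the deformed triangles with additive blending on the GPU rather than loop in Python.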

Catastrophe theory was used in the 70s for things like modelling conflict, understanding the difference between erotica and pornography, predicting when prison riots will happen and so on. You can see why the subject fell out of favour. As I say, it was the chaos theory of its day.

Incidentally, you may wonder what deeper significance the number 7 has here. It turns out the catastrophes are each closely tied to Dynkin diagrams. Dynkin diagrams have a bizarre habit of popping up in all sorts of branches of mathematics, quivers being a popular one right now. But at this point I really have no clue what's going on as I've barely read a few pages of my catastrophe theory book.
